Data, UX and algorithms: how we are being programmed by our machines

Take a moment to think about all the devices through which you interact with your machines: keyboards, mice, touchscreens, microphones, AR or VR goggles, or that brain implant you just ordered from Neuralink. More and more of these rely on data and machine learning to translate physical inputs into machine instructions. This might seem trite (what’s new?), but replacing the one-to-one mapping between human input and machine output with a learned function approximation has important consequences.

The insight dawned on me while I was attending the 2020 Recommender Systems conference (RecSys) virtually*. Recommender systems operate at the intersection of users, algorithms and data. An important part of building them consists of removing the explicit instruction set from human-machine interactions. To achieve this, these systems rely on behavioural data such as clicks, views and dwell time (implicit feedback), which they combine with deliberate user actions such as ratings and likes (explicit feedback). Both data sources feed machine learning models that predict user preferences, needs and actions.
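To make the split between implicit and explicit feedback a little more concrete, here is a minimal Python sketch of how the two might be folded into a single preference score per user and item, followed by a simple user-based collaborative filtering step that recommends items the user has not seen yet. The event types, the weights and the [-1, 1] rating scale are hypothetical assumptions chosen for illustration; real recommenders typically learn such weightings rather than hard-coding them.

```python
from collections import defaultdict
from math import sqrt

# Hypothetical weights for implicit (behavioural) signals; illustrative only.
IMPLICIT_WEIGHTS = {"view": 1.0, "long_dwell": 2.0, "share": 3.0}


def preference_scores(implicit_events, explicit_ratings, explicit_weight=2.0):
    """Fold implicit events and explicit ratings into one score per (user, item).

    implicit_events: iterable of (user, item, event_type)
    explicit_ratings: iterable of (user, item, rating), rating in [-1, 1]
    """
    scores = defaultdict(lambda: defaultdict(float))
    for user, item, event in implicit_events:
        scores[user][item] += IMPLICIT_WEIGHTS.get(event, 0.0)
    for user, item, rating in explicit_ratings:
        # Assumption: a deliberate rating counts more than a passive signal.
        scores[user][item] += explicit_weight * rating
    return scores


def cosine(a, b):
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm = sqrt(sum(v * v for v in a.values())) * sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def recommend(scores, user, k=2):
    """User-based collaborative filtering: score items the user has not
    interacted with by the preferences of similar users."""
    target = scores[user]
    candidates = defaultdict(float)
    for other, prefs in scores.items():
        if other == user:
            continue
        similarity = cosine(target, prefs)
        for item, value in prefs.items():
            if item not in target:
                candidates[item] += similarity * value
    return sorted(candidates, key=candidates.get, reverse=True)[:k]


implicit = [
    ("ana", "cats", "view"), ("ana", "cats", "long_dwell"), ("ana", "dogs", "view"),
    ("ben", "cats", "share"), ("ben", "birds", "long_dwell"),
    ("cas", "dogs", "view"), ("cas", "fish", "share"),
]
explicit = [("ana", "dogs", -1), ("ben", "birds", 1)]

scores = preference_scores(implicit, explicit)
print(recommend(scores, "ana"))  # ['birds', 'fish']
```

In this toy data, ana's explicit thumbs-down on dogs gives her a negative similarity to cas, who engaged with dogs, so cas's item gets a negative score while ben's item rises to the top.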

Through the guesses and approximations made by these machine learning models, recommender systems can automate part of the cognitive work users have to do. In doing so, they allow users to interact with their machines through behavioural programming. The behaviour of these systems (the outcomes of their computations) is therefore indirectly shaped by user behaviour: the system learns to adapt to user preferences through the data it collects on its usage and its users. In low-stakes, repetitive tasks, this can greatly improve the quality of the user experience. It can also significantly reduce the cognitive and/or physical effort involved. There is a catch, though: the behavioural programming goes both ways.

We, the program

In a professional setting I don't see anything wrong with the mekanisation of our cognitive workload (meka in the Japanese sense of giant walking robots, メカ, rather than the Greek μηχανή, device, gear or means). It allows organisations to create more value with fewer workers. And by adapting their behaviour to new AI-powered software tools, workers become more valuable to the organisations they work for. There are of course all kinds of labour economics effects, which I won't go into here. David Shapiro does a good job of listing some of the more extreme ones in this YouTube video on post-AGI economics.

That is all great, but there is a caveat. ML systems need to learn how to distinguish good patterns (desired behaviour) from unwanted outcomes (bad robot!). Machine learning systems do this by minimising a cost function that serves as a proxy for human preferences, evaluated over tons of examples (the training data) from which they learn the patterns that lower that cost. Both the cost function and the training data are handcrafted and curated by humans.
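A minimal Python sketch of why the choice of proxy matters: the same logistic-regression model, trained by gradient descent on the same log-loss cost function, learns opposite preferences depending on which human-chosen label it is given. The two features, the six posts and both label sets are made up for illustration; they are not data from any real platform.

```python
from math import exp, log


def sigmoid(z):
    return 1.0 / (1.0 + exp(-z))


def predict(model, x):
    """Probability the model assigns to the positive label for features x."""
    weights, bias = model
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def log_loss(model, data):
    """Average cross-entropy over the training data: the cost function."""
    total = 0.0
    for x, y in data:
        p = min(max(predict(model, x), 1e-12), 1 - 1e-12)  # avoid log(0)
        total -= y * log(p) + (1 - y) * log(1 - p)
    return total / len(data)


def train(data, lr=0.5, epochs=1000):
    """Logistic regression fitted by batch gradient descent on log loss."""
    n_features = len(data[0][0])
    weights, bias = [0.0] * n_features, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n_features, 0.0
        for x, y in data:
            err = predict((weights, bias), x) - y  # d(loss)/d(logit)
            for i, xi in enumerate(x):
                grad_w[i] += err * xi
            grad_b += err
        weights = [w - lr * g / len(data) for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / len(data)
    return weights, bias


# Hypothetical posts described by two made-up features: (outrage, informativeness).
posts = [(1.0, 0.1), (0.9, 0.2), (0.2, 0.9), (0.1, 0.8), (0.8, 0.7), (0.3, 0.2)]
clicked = [1, 1, 0, 0, 1, 0]  # proxy label: did the user click?
valued = [0, 0, 1, 1, 1, 0]   # alternative label: did the user say it was worth their time?

click_model = train(list(zip(posts, clicked)))
value_model = train(list(zip(posts, valued)))

rage_bait, explainer = (0.9, 0.1), (0.1, 0.9)
print(predict(click_model, rage_bait) > predict(click_model, explainer))  # True
print(predict(value_model, rage_bait) > predict(value_model, explainer))  # False
```

Nothing about the model or the optimiser changes between the two runs; only the human-chosen label, the proxy for preference, does.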

\[ \text{great life} = \text{fun} + \text{family} + \text{friends} + \text{health} + \text{wealth} \]

You'll probably see where I am going with this. There are plenty of ways to formulate cost functions mathematically that boost corporate metrics, worker productivity, or task completion rates. When it comes to a mathematical formulation of a good human life, however, we are still very much groping in the dark. The science is just not there. And even if it were, there are good evolutionary reasons not to let our lives be predetermined by scientifically (or politically!) devised formulae. Yet somehow we have let ML systems trained on corporate cost functions run rampant in both our public and our private lives.

Given the scale of the issue, I thought it might be good to take a closer look at some of the mechanisms (in the Greek sense of the word) through which our continued and excessive use of these corporate ML systems is changing our decision-making and behavioural patterns.

The representativeness heuristic

One of the most prominent of these works through the representativeness heuristic. The Decision Lab provides the following definition:

The representativeness heuristic is a mental shortcut that we use when estimating probabilities. When we’re trying to assess how likely a certain event is, we often make our decision by assessing how similar it is to an existing mental prototype.

The more we let the probabilities in our mental models be shaped by the non-choices of these recommender systems, the more we align our real-world experiences and expectations with the world online. To my mind, any kind of algorithm that messes with our mental models of others and of society in general is a truly dangerous thing. Cue fascism.

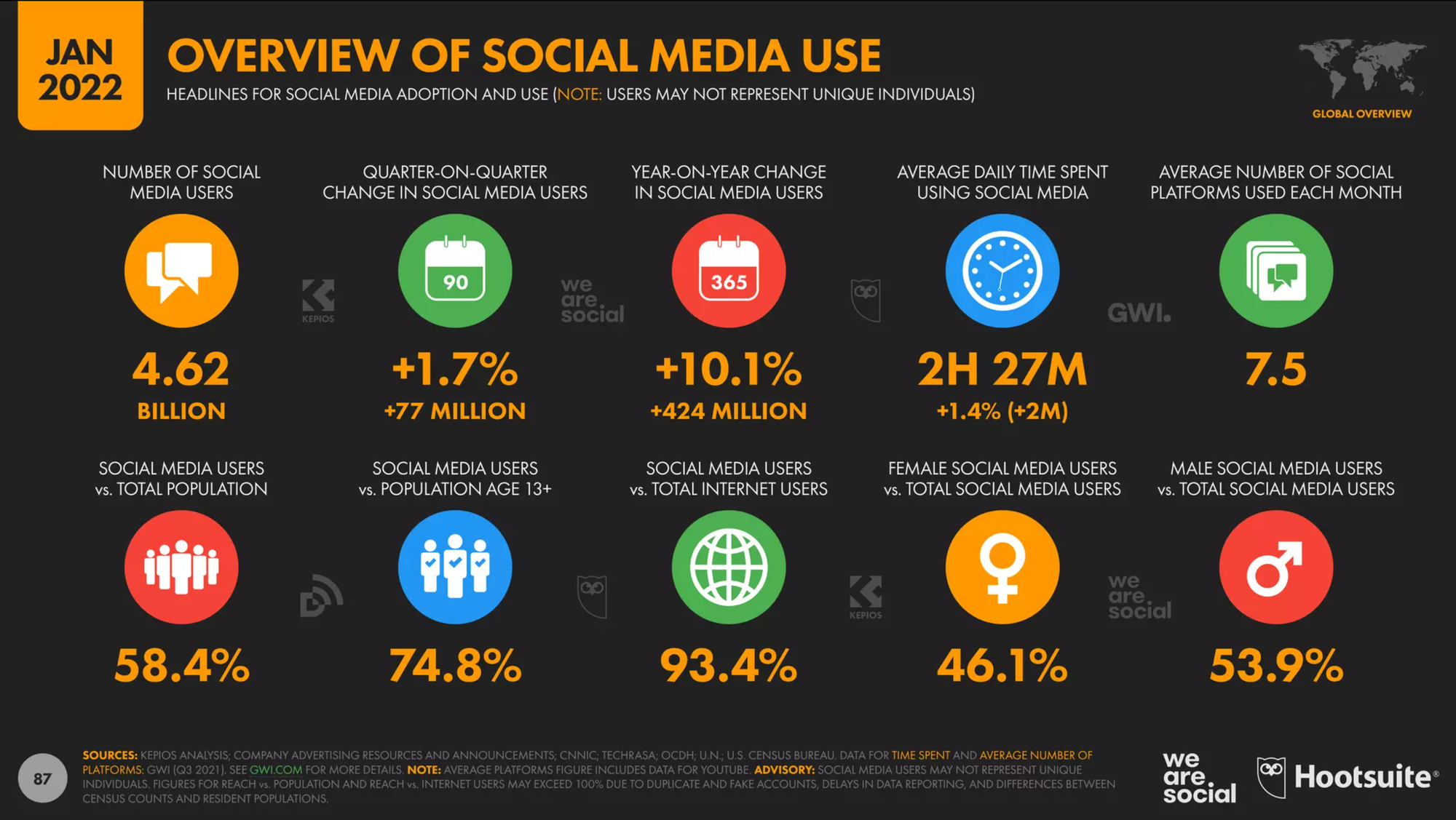

Take for example the innocent act of liking a social media post. As Sinan Aral points out in The Hype Machine (2020), some of the most widely used content recommendation systems on your smartphone take their behavioural cues, their explicit feedback, from likes and shares. Aggregated over trillions of interactions, this one-dimensional feedback signal has led to some pretty strange emergent behaviour, in both recommender systems and us humans.
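The loop in which likes drive exposure and exposure drives likes can be illustrated with a toy Python simulation. It is a deliberately simplified sketch under stated assumptions: posts are ranked purely by accumulated likes, only the top slots are shown, and a hypothetical `quality` value fixes what fraction of viewers like a post once they see it. Real platforms combine far more signals and usually add some exploration, so this shows the dynamic, not any specific platform's ranking.

```python
from dataclasses import dataclass


@dataclass
class Post:
    name: str
    quality: float  # hypothetical: fraction of viewers who like the post when shown
    likes: int = 0


def simulate(posts, rounds=10, viewers_per_round=100, slots=2):
    """Rank purely by likes, show only the top `slots` posts each round,
    and add the likes those posts earn from the viewers who see them."""
    for _ in range(rounds):
        feed = sorted(posts, key=lambda p: p.likes, reverse=True)[:slots]
        for post in feed:
            post.likes += round(post.quality * viewers_per_round)
    return {p.name: p.likes for p in posts}


posts = [
    Post("early_meme", quality=0.2, likes=3),  # small head start
    Post("hot_take", quality=0.3, likes=2),
    Post("careful_essay", quality=0.6),        # most liked when seen, but starts at zero
]
print(simulate(posts))
# {'early_meme': 203, 'hot_take': 302, 'careful_essay': 0}
```

The post with the highest quality never gains a single like, because it never makes it into the feed in the first place: under a one-dimensional signal, a small head start is enough to lock in the ranking.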

And while there are laws against environmental pollution that make it illegal to dump toxic waste on public property, our social media platforms are, for better or for worse, private property (X being an extreme case these days). No comparable law stops them from polluting our information environment, so some of the most highly paid and impactful computer scientists and machine learning researchers in the world are working to optimise dark patterns. This is especially true for platforms that have historically generated the bulk of their revenue from ads, such as TikTok, X, Facebook, YouTube and Instagram.

With machine learning guiding our interactions with machines, we are also being programmed by our machines to like, think, and act a certain way.

To be clear, the same holds for any type of media or propaganda apparatus. The deliberately warped view of the world that traditional media push to the general public is well documented. That is how they hit their sales and subscription targets, after all. In an experiment described here, a Russian newspaper lost two thirds of its readers when it decided to cover only positive news for a day.

My contrarian take on all this is that the avalanche of social proof generated by our media consumption habits doesn't necessarily make the resulting version of reality better (or worse). It just makes it more prevalent, more common. That does not mean we should accept the current state of affairs, in which digital platforms dominate social interaction, as a given. Doing so is neither the sanest nor the safest option. In fact, I think that handing control of our social glue to money-making machines, as if that were an inevitable feature of the future, is a pretty bad idea. Yes, technological progress is happening at an enormous scale. The changes we are living through are probably bigger and more impactful than those of the Industrial Revolution. But as with any major technological change, their impact on human wellbeing is nonlinear and unequal, and can come with a lot of collateral damage.

Should we be delegating common sense to ML models?

But, you may ask, is letting algorithms run society actually a bad thing? Shouldn't more data lead to a more balanced and nuanced view? Shouldn't we just trust the machine? The main problem, as I see it, is that statistical models are reductive by nature. Their ability to represent reality by reducing it to a grid of parameters is exactly what makes them so powerful and elegant. But as I pointed out earlier, they are only as good (for you) as their cost function. And if you try to define 'optimise society' or 'produce the greatest common good' mathematically, what data would you use as input?

The problem is that the economic data at our disposal is severely lacking in both quality and quantity. It provides nowhere near the level of granularity needed to optimise a national economy. I don't keep track of Chinese state experiments with AI, but it looks like whatever algorithmically guided micro-optimisations of society they are carrying out are no match for the macro environment in which they operate (check out the ChinAI newsletter if you are interested in what's going on over there). A case in point: the biggest experiment ever in running a society by formula at this level was probably communist central planning, and we all know how that went.

And even if we had perfect data on the past and the present, I still believe that at the macro level we imperfect, fallible, biased humans should be in charge. Even if we develop online learning AI systems with real agency and more robust feedback mechanisms, the question is not how 'good' (as in optimised) their decisions are**. The question is: do we give them control over our future? Do we put them in charge of plotting our course as a society, economy, and species? Should we let them make value-based judgement calls? My answer, as you will have guessed, is a resounding no. We should restrict AI applications to use cases with lots of data readily available, robust checks and effective feedback mechanisms in place, and enough gains in efficiency to warrant their deployment.

Personal AI

So after all that, is there still room for AI in our private lives? I think there is! There are a lot of ways in which data and machine learning can help boost your personal growth, productivity, and learning, and tailor nutrition and healthcare to you in ways that no human or other tool in our species' toolbox can.

In these applications the benefits of using AI to enhance and optimise our human experience massively outweigh the drawbacks they will inevitably have. The main difference between personal AI systems and the current generation unleashed on the world is that personal AI seeks to track and maximise specific individual goals rather than some vague corporate goal (looking at you, Meta). These systems are designed from the ground up to work for your benefit, rather than to benefit more from your freely given time and attention than you do from their service.

Going forward I plan to do a series of deep dives into the personal AI space, so if you're interested feel free to subscribe to this newsletter! I've previously written about personal AI in healthcare here.

*) This write-up is an updated and revised version of a 2020 blog post originally published in The Startup on Medium. The 2020 version is still available on the website of a freelance collective I used to be part of. I've since deleted the Medium account it was published under, so the 2020 article is no longer available there.

I felt the post was due an update given the ever-growing role of conversational interfaces in our lives (e.g. ChatGPT and friends). The original post also framed TikTok as an example of good (as in more useful to the user) UI and UX. I've since come to realise how harmful these kinds of applications can be to the mental and physical wellbeing of their users.

**) If you are wondering what it would be like to live in an algorithmically optimised society, the novel Gnomon by Nick Harkaway gives a pretty good idea.