Decision-Making Under Uncertainty: Lessons From AI

Are you sure you know what tomorrow looks like? You probably have a general sense of the day ahead, but can you predict exactly where you will be at 9:10 tomorrow morning? What about how you'll feel at that moment? Or how you'll sleep overnight?

Even though there are a lot of things in life we can be certain about – the sun will rise tomorrow morning, and our planet will still be hurtling through space at breakneck speeds – our personal prediction window is actually quite narrow.

For a lot of things, we just assume they will stay the same. This ties in with the distinction between System 1 and System 2 thinking that Daniel Kahneman popularised, building on his work with Amos Tversky. System 1 is our fast, automatic default mode, in which we tend to assume everything remains the same; System 2 is the slower, deliberate mode in which we think about everything else, including long-term changes.

This is a useful formalisation, but as K&T demonstrated in many of their experimental studies, knowing our cognitive biases does not necessarily help us make better decisions. Neither does Nassim Taleb's key insight that our environment is uncertain.

So what gives? In what follows I want to discuss a couple of strategies for dealing with uncertainty that are applied when building AI systems. AI systems typically deal with automated decision-making at scales of tens to millions of decisions per day, and need robust checks in order to continue to function as intended.

Is there anything we can learn from AI that we can apply to our lives?

Let's find out.

A changing world

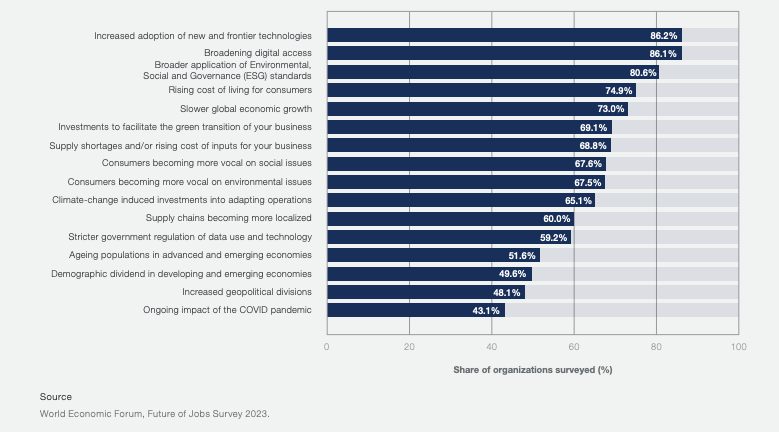

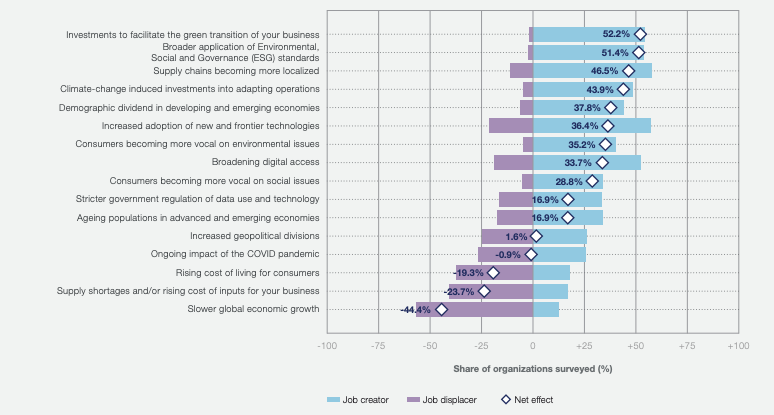

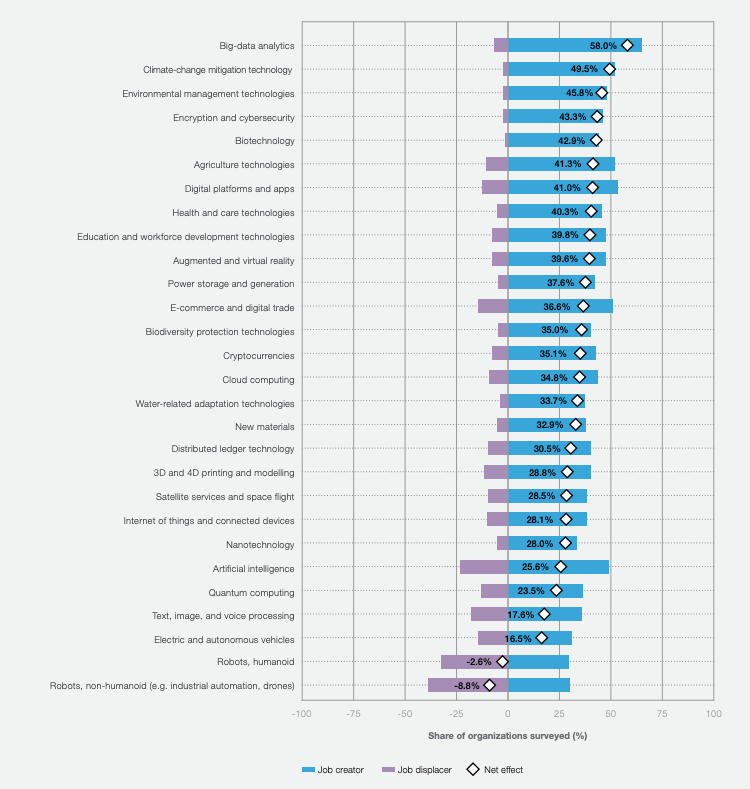

The key macrotrends driving business transformation identified by business leaders surveyed in 2023 (source: World Economic Forum Future of Jobs Survey 2023)

Just to state the obvious, we're entering uncharted territory when it comes to climate, society, technology and the economy.

Besides our rapid, self-propelled (pun intended) exit from the Holocene, humanity is facing rising inequality, a complete overhaul of the labor force, and a nexus of technological advances that is driving the cost of production closer and closer to zero. All of these changes are ushering in an experience economy that is unlike anything the world has ever seen, rendering our existing economic and business models pretty much useless.*

That's a lot of factors to take into account when you're shopping for groceries.

So with all the uncertainty in the world around us, what can we do to make the best possible decisions not just for right now, but for our future selves?

We humans have a limited information window and limited processing bandwidth when it comes to making decisions. Nevertheless, we are still superior to AI in at least one way: the information we have is far more relevant to our own personal decisions.

So let's just assume for the sake of our argument that we know better what we want than, say, Amazon. How can we use this to our advantage?

Let's call this advantage our "based confidence"**, since it's based in our lived experience. One way to apply this based confidence is to assume you're right until proven otherwise.

Perfect, right? We just listen to our gut! Intuition has gotten a bad rep anyway; it's time to change that.

... so we're done? Is there any way we can improve on always being right?

There might be! There is something called the OODA loop, a decision cycle that originated in military strategy and that many AI systems mirror: Observe, Orient, Decide, Act... then rinse and repeat.

OODA FTW

Let's apply OODA to our shopping example. Say I'm standing in the dairy aisle in my local supermarket, unsure about which milk to get. I could open my phone, search for reviews, and spend the next 10 minutes reading up on the pros and cons of oat milk vs goat milk. Alternatively, I could observe the variety of milk on display, pick one that feels right, and take it home to try out. Assuming manufacturers do their job and I don't get sick, this just saved me over nine minutes in the dairy aisle.

And if I didn't like the milk I bought, the information gathered this way will allow me to pick another brand of milk with greater confidence, since I've both narrowed down my options (by crossing out the milk I didn't like) and gained valuable intel on my milk preferences. All of this in 30 seconds.
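
To make the milk example a bit more concrete, here is a minimal Python sketch of the loop. Everything in it is my own illustrative assumption rather than a standard OODA implementation: the function name `ooda_shopping`, the `taste_test` callback that stands in for trying the milk at home, and the random "gut pick" that stands in for choosing whatever feels right.

```python
import random


def ooda_shopping(options, taste_test, rng, max_trips=10):
    """Run short Observe-Orient-Decide-Act cycles until a milk is liked.

    All names here are hypothetical and only illustrate the idea.
    """
    remaining = list(options)  # Observe: what is on the shelf
    history = []               # what we learned on each trip
    for trip in range(1, max_trips + 1):
        if not remaining:
            break  # nothing left to try
        # Orient: milks we already disliked were removed from `remaining`.
        # Decide: a quick gut pick, modelled here as a random choice.
        choice = rng.choice(remaining)
        # Act: buy it, take it home, and see whether we like it.
        liked = taste_test(choice)
        history.append((trip, choice, liked))
        if liked:
            return choice, history
        # Feedback: cross out the milk we did not like.
        remaining.remove(choice)
    return None, history


milks = ["oat", "goat", "whole", "soy"]
# Assumption for the demo: I turn out to like oat milk only.
favourite, log = ooda_shopping(milks, lambda milk: milk == "oat", random.Random(0))
print(favourite, log)
```

Each pass through the loop costs one quick purchase, and every disliked milk shrinks the list for the next trip, so with four options the search ends after at most four trips.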

There are of course decisions where the stakes are much higher and do-overs are very hard to come by. Think of buying a house, or of choosing who to marry.

It goes without saying that in these kinds of high-stakes decision-making scenarios, observing and orienting should be done with more care. It is, however, a mistake to think you will ever have enough information. As already mentioned, humans are inherently limited in the amount of information they can process–even with the help of AI assistants and all of the collective information mankind has gathered since the dawn of time at their fingertips.

So what can we do to improve the probability of a desired outcome for these kinds of high-stakes decisions? For one, we should still systematically gather information about the things we are uncertain about. I'm not advocating for blind guesses–more information can definitely help guide us in our decision-making process.

However, in any decision-making scenario there will also be unknown unknowns–things we're overlooking, that are outside of our information context. In machine learning terms, the variables we didn't know (and might never know) existed.

A closely related term for this kind of not-knowing is epistemic uncertainty.

Dealing with epistemic uncertainty

Epistemic uncertainty–from the Greek ἐπιστήμη, knowledge or understanding–is us hitting the limits of our knowledge. Whether at a personal or at a species level, this means we cannot rely on past experiences to guide our decisions. Here, gathering ever more information can actually hurt our decision-making: it delays action without touching the things we don't know we're missing.

So is there anything we can do to improve the outcomes of our decisions when faced with epistemic uncertainty? Let's look at a couple of strategies.

- Make your OODA loops as short as possible

  - The shorter the time between observation–orientation–decision–action cycles, the more often you can correct course. This principle is also applied in AI, where shorter feedback loops often lead to better-performing systems.

  - Use technology to your advantage: record your observations, decisions and actions so you can trace back and learn from them. Again, data collection is one of the bedrocks of AI.

  - Be prepared to change your decisions as new information becomes available. In AI terms, retrain your model (of the world).

- Choose your framing carefully

  - Find the right way to frame your decisions. For example, by using an objective frame–system, action and information–you will be more detached from your decisions. In some cases, a subjective framing is of course preferred 😄 In AI, one way researchers think about framing is through representation learning.

  - Make sure to distinguish between thoughts, reasons, actions and outcomes. Reinforcement learning formalises a similar distinction between expectations, values, actions and rewards.

  - Stories matter, and the way we tell things to ourselves and others shapes our memories of said events. Use this to your advantage. An example from AI: if we frame a problem in terms of reinforcement learning, our system will learn which actions to take to maximise reward. If we frame it as a supervised learning problem instead, our AI system will learn to predict an outcome from labelled examples.

- Plan for contingencies

  - Identify the biggest areas of uncertainty that are relevant to your decision. In AI systems, a lot of work often goes into improving the robustness of deployed systems by adding more, or more varied, sources of data.

  - Mitigate risks by developing worst-case scenarios. One way this is handled in AI is by deploying AI systems with guardrails–for example, by automatically blocking large language models (LLMs) from generating hate speech.

  - Always try to be at least directionally correct. You will be wrong a lot, but this doesn't matter as long as your right decisions outweigh your wrong decisions.
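
For the record-keeping and "directionally correct" points, a small decision journal is enough to get started. The sketch below is only one possible way to do it, and every name in it (`Decision`, `Journal`, `payoff`, `hit_rate`, `net_payoff`) is hypothetical. The payoff numbers are made-up scores on a scale I picked for the demo, where positive means the decision worked out.

```python
from dataclasses import dataclass, field


@dataclass
class Decision:
    description: str       # what I decided
    expected: str          # what I thought would happen
    payoff: float = 0.0    # filled in later: positive = good, negative = bad
    resolved: bool = False


@dataclass
class Journal:
    entries: list = field(default_factory=list)

    def record(self, description, expected):
        """Log a decision at the moment it is made."""
        entry = Decision(description, expected)
        self.entries.append(entry)
        return entry

    def resolve(self, entry, payoff):
        """Come back later and score how the decision turned out."""
        entry.payoff = payoff
        entry.resolved = True

    def hit_rate(self):
        """Share of resolved decisions that turned out well (None if none yet)."""
        done = [e for e in self.entries if e.resolved]
        if not done:
            return None
        return sum(e.payoff > 0 for e in done) / len(done)

    def net_payoff(self):
        """Total payoff over resolved decisions."""
        return sum(e.payoff for e in self.entries if e.resolved)


journal = Journal()
milk = journal.record("Bought oat milk without reading reviews", "I'll like it")
train = journal.record("Took the early train", "It will save me time")
gym = journal.record("Signed up for a trial class", "I'll enjoy it")

# Made-up scores for illustration only.
journal.resolve(milk, 1.0)
journal.resolve(train, -0.5)
journal.resolve(gym, 1.0)

print(journal.hit_rate(), journal.net_payoff())
```

I track the net payoff separately from the hit rate on purpose: a few big wins can outweigh many small misses, so being wrong a lot is compatible with a positive total.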

And finally, stay calm because things are changing–with or without you.

Notes

*) In the case of economic models, even more useless than they already were in Keynes' time. I've yet to come across a single correct prediction by an economic model–if you know of any, let me know in the comments!

**) I don't think the concept of embodied cognition used in academic research does enough justice to our subjective experiences (it also shouldn't, of course–it's science, which is meant to be objective).