Scanning 150+ Websites with Cursor and LangChain

Anthropic recently released Claude Computer Use (CCU) to showcase their latest LLM interacting directly with live websites. Impressive as it is, CCU is far too slow to be practically useful.

I asked CCU to analyse the contents of a blog post four times. The agent completed the task in three of those runs, and each successful run took 6-12 minutes. Given that the actual text of the blog post is probably only 40-50 KB in size, it is clear that the CCU way of accessing information is suboptimal.

Because I had to analyse the UI and UX patterns of 150+ websites for a conference talk, I needed an alternative solution. I decided to use Cursor to generate a multi-agent system (MAS) in Python with the LangChain framework. The application that interfaces with the MAS is built in Streamlit, an open-source Python framework.

You can find a video of the build here:

Generating the project code took me roughly 30 minutes, although this was just a first version and I was also explaining the process for the YouTube video as I was working on it. Without working in presenter mode, I think I could get a complete project with correct URL resolution and updated prompts done in roughly 60 minutes.

Some things to keep in mind when working with Cursor or any AI coding IDE:

- Iterate fast: try to get the project running as soon as possible before cleaning up the code. Spotting mistakes in generated code early will save you a lot of headaches down the line.

- Choose your LLM wisely: I've been using Claude 3.5 Sonnet to do all my coding in Python, React and TypeScript for the last few months and it's worked amazingly well. My experience with other LLMs has been much more of a mixed bag.

- Do your own software architecture design before starting a build. Check which versions of third-party dependencies you want to use, set clear guidelines for project design, and have a good idea of what you want the final application to look like. I've included my .cursorrules file with the preparatory work below as an example.

We are building an AI agent application in Streamlit with LangChain and LangGraph. Use the following Python libraries and versions:

langchain==0.3.7

langchain-anthropic==0.2.4

langgraph==0.2.44

anthropic==0.38.0

pandas==2.2.3

firecrawl==1.4.0

pillow==10.4.0

streamlit==1.39.0

If you are not sure about the libraries, ask the user.

The Streamlit app should be easy to use, and show the LangGraph workflow in the sidebar. While the agents are running, the screen should show the output of the LangChain agents.

Follow the latest best practices for designing and writing the LangChain agents and the LangGraph multi-agent system:

## Key Architectural Principles

- **Modularity**: Design systems with modular architectures to facilitate the integration of diverse agents with specific capabilities. This allows for easy updates and maintenance without disrupting the entire system.

- **Scalability**: Ensure that the system can scale seamlessly by adding more agents and handling increased communication loads effectively.

- **Interoperability**: Design for interoperability so that agents can interact with various platforms and legacy systems, enhancing their utility across different environments.

## Implementation Strategies

- **Define Clear Roles**: Assign specific roles to each agent to ensure specialization and efficiency in task execution. This approach leverages the strengths of each agent, allowing them to focus on particular tasks.

- **Utilize Robust Communication Protocols**: Establish secure and efficient communication protocols to facilitate information exchange and coordination among agents. This is crucial for maintaining system integrity and performance.

- **Start Simple and Iterate**: Begin with a simple setup of one or two agents and gradually introduce complexity. This approach helps in validating core designs and interaction patterns before scaling up.

## Task Decomposition and Collaboration

- **Task Decomposition**: Break down complex tasks into manageable subtasks that can be handled by specialized agents. This not only simplifies problem-solving but also allows for parallel execution, improving efficiency.

- **Collaborative Intelligence**: Encourage collaboration among agents to achieve results beyond the capabilities of individual agents. This is particularly useful for complex problems requiring diverse expertise.

For modularity, we want to pass state between nodes in LangGraph as much as possible to set the right context for the agents.

Here is the langgraph documentation: https://langchain-ai.github.io/langgraph/tutorials/introduction/

We are using the latest version of Claude 3.5 Sonnet for all the agents: claude-3-5-sonnet-20241022.

For internet search, use DuckDuckGo to get the information we need. Use Firecrawl to scrape websites.

Firecrawl can scrape both the text of a website and take screenshots. Decide which one to use based on the task.

Here is the Firecrawl documentation: https://docs.firecrawl.dev/sdks/python

For passing screenshots taken by Firecrawl to the Claude agents, use the following code:

```
"messages": [
    HumanMessage(content=[
        {"type": "text", "text": "<custom prompt for the agent specific to the task>"},
        {"type": "image_url", "image_url": {"url": f"data:image/{screenshot_image_format};base64,{screenshot_data}"}},
    ])
],
```

Where screenshot_image_format and screenshot_data are the format and data of the screenshot (this is .png format for Firecrawl screenshots).

Use the context in the nodes in the prompts you write for the agents.

If you need to use a library that is not in the requirements.txt file, ask the user if you are allowed to install it.
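To make the screenshot instruction in the rules file more concrete, here is a minimal sketch of how that message content could be assembled. The helper name `build_screenshot_content` is my own and not part of the generated project, and it assumes you already hold the screenshot as raw bytes (how Firecrawl hands the screenshot back depends on the SDK version, so check the docs). It only uses the standard library and returns the plain list that the rules file passes to `HumanMessage(content=...)`.

```python
import base64


def build_screenshot_content(prompt: str, screenshot_bytes: bytes,
                             image_format: str = "png") -> list[dict]:
    """Build a text + image content list for one multimodal human message.

    Hypothetical helper: assumes the screenshot is available as raw bytes.
    """
    # Encode the raw image bytes so they can be embedded in a data URL.
    screenshot_data = base64.b64encode(screenshot_bytes).decode("ascii")
    return [
        {"type": "text", "text": prompt},
        {
            "type": "image_url",
            "image_url": {
                "url": f"data:image/{image_format};base64,{screenshot_data}"
            },
        },
    ]
```

With LangChain installed, the result would be wrapped as `HumanMessage(content=build_screenshot_content(prompt, png_bytes))` before it is sent to the agent.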

To generate a first version of the project code, I used a functionality in Cursor called Cursor Composer with the following prompt:

I need an app that can take csv files with lists of website urls as input, and analyses the design and copy of the websites for UI and UX patterns. The website urls are all for companies that are building AI agents, and I want to get a summary for each website of the UI and UX patterns used by the company. This could mean that the agent needs to crawl not only the homepage but also the page with the actual agent or AI agent demo. After analysing all the individual websites, and writing the crawling output (text and screenshots) and analysis results to disk, the user should be able to request a meta-analysis of the crawl and analysis results.

These are the prompts generated by Claude 3.5 Sonnet for the two agents in the website analysis app:

You are a UI/UX expert analyzing an AI agent company's website.

Analyze the following website content and screenshots, focusing on:

1. Navigation and Information Architecture

- How is the site structured?

- Is the navigation clear and intuitive?

- How accessible is key information?

2. Visual Design Elements

- What is the overall visual style?

- How effective is the use of color, typography, and imagery?

- Is the design consistent with an AI company's brand?

3. Call-to-Actions and User Flow

- What are the primary CTAs?

- How clear is the user journey?

- Are there clear paths to conversion?

4. AI Agent Interface Design (if demo page available)

- How is the AI agent interface designed?

- What interaction patterns are used?

- How intuitive is the chat/demo experience?

5. Content Strategy and Messaging

- How well does it communicate the AI capabilities?

- Is the technical content accessible?

- What is the overall brand voice?

Provide specific examples and actionable recommendations for each area.

For the meta-analysis AI agent:

You are a UI/UX expert analyzing patterns across multiple AI agent company websites.

I have analyzed {len(analyses)} websites:

{', '.join(urls)}

For each website, I have detailed analysis of:

- Navigation and Information Architecture

- Visual Design Elements

- Call-to-Actions and User Flow

- AI Agent Interface Design

- Content Strategy and Messaging

Based on the individual analyses, identify:

1. Common UI/UX Patterns

- What navigation patterns are most common?

- What visual design elements are trending?

- How do most sites handle AI agent demos?

2. Best Practices

- What approaches seem most effective?

- Which sites exemplify good UI/UX?

- What patterns should others adopt?

3. Areas for Improvement

- What common issues did you notice?

- Where could sites generally improve?

- What opportunities are being missed?

4. Recommendations

- What specific UI/UX recommendations would you make?

- How could sites better showcase AI capabilities?

- What patterns should become standard?

Provide specific examples from the analyzed sites to support your findings.

Individual site analyses:

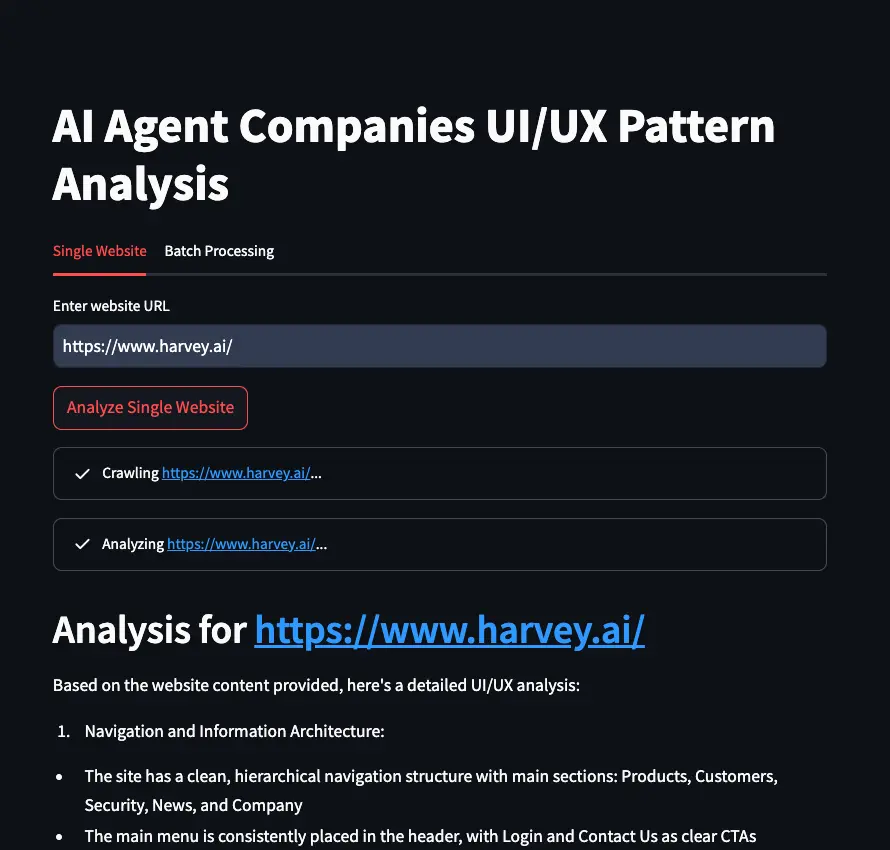

{chr(10).join(f'--- {a["url"]} ---{chr(10)}{a["analysis"]}{chr(10)}' for a in analyses)}For more details on the build, watch the YouTube video! Below is a screenshot of the Streamlit app generated by Claude 3.5 Sonnet in Cursor.
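The placeholders in the meta-analysis prompt (`{len(analyses)}`, `{', '.join(urls)}` and the `chr(10).join(...)` expression) show that it is a Python f-string filled from a list of per-site results. The sketch below illustrates roughly how that assembly could work. The function name `build_meta_prompt` and the shortened prompt text are my own assumptions, not the generated code. `chr(10)` is simply a newline; the generated prompt uses it because backslashes were not allowed inside f-string expressions before Python 3.12.

```python
def build_meta_prompt(analyses: list[dict]) -> str:
    """Fill a shortened version of the meta-analysis prompt.

    Hypothetical helper: each item in `analyses` is assumed to be a dict
    with a "url" key and an "analysis" key, as the placeholders suggest.
    """
    urls = [a["url"] for a in analyses]
    # One block per site: a header line with the URL, then its analysis.
    sections = "\n".join(
        f"--- {a['url']} ---\n{a['analysis']}\n" for a in analyses
    )
    return (
        "You are a UI/UX expert analyzing patterns across multiple "
        "AI agent company websites.\n\n"
        f"I have analyzed {len(analyses)} websites:\n"
        f"{', '.join(urls)}\n\n"
        "Individual site analyses:\n"
        f"{sections}"
    )
```

Keeping the per-site analyses as plain dicts like this also makes it easy to write them to disk and reload them later when the user requests the meta-analysis.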

If you're curious, the YouTube recording of the conference talk can be found here: