I'm a machine learning engineer. Will AI take my job?

Okay, so here's the thing.

As a machine learning engineer, I build AI applications for a living.

Now this generative AI thing has come along, and it can write code faster and more cheaply than a human ever could.

So... am I at risk of being replaced? Is this our Dr. Frankenstein moment?

I've always enjoyed automating myself out of tasks, projects and clients.

But automating myself out of a profession is a big step, even for me.

Just a couple of weeks ago, Cognition AI released Devin, which autonomously resolved about 14% of the issues in the SWE-bench benchmark.

Which raises the question: how bad is it, exactly? Should I be looking for a new job?

Tickets, tickets, tickets

Going by the numbers (Devin resolved just 14% of the coding tasks) and by the share of tasks in a typical machine learning project that are actually coding tasks*, I probably don't have to enroll in that Italian barista course just yet.

Gergely Orosz makes the same point in The Pragmatic Engineer: despite the hype, Devin is currently nothing more than another AI coding assistant.

And after using GitHub Copilot for a year, I've at no point felt threatened by it.

GitHub Copilot is great for writing boilerplate code, but for almost anything else I still rely on my own judgement and experience.

Then there's also the matter of the cloud environments I usually work in.

I'd love it if some tool would come along and automate away all of the manual setup and configuration work needed to run code on cloud infrastructure.

In fact, ease of setup (compared to on-premises data centres) was one of the main selling points behind a lot of multimillion-dollar corporate cloud migrations.

But truth be told, hipster movements like serverless and Kubernetes have only increased the effort needed to deploy and maintain software infrastructure.

Yes, we have greater flexibility, but I'm not sure the increase in effort has been proportional to the gains.

Infrastructure as code takes away some of the pain of provisioning cloud resources, but it's often still slow going, even for experienced cloud engineers.

I don't see generative AI by itself solving these deployment and operations issues anytime soon. That said (note to AWS, GCP, and Azure), a cloud-native, AI-assisted IDE with built-in infrastructure provisioning would go a long way toward reducing deployment effort.

Then there is the issue of code quality.

A recent study by Gitclear suggests that much of the boilerplate code generated by AI coding assistants is hard to maintain and error-prone.

They measured this through "code churn": the share of lines that are changed or reverted within two weeks of being committed. Churn rose by almost 40% year over year from 2022 to 2023.

In their own words:

In 2022-2023, the rise of AI Assistants are strongly correlated with "mistake code" being pushed to the repo. If we assume that Copilot prevalence was 0% in 2021, 5-10% in 2022 and 30% in 2023 ... the Pearson correlation coefficient between these variables is 0.98 (...). Which is to say, that they have grown in tandem. The more Churn becomes commonplace, the greater the risk of mistakes being deployed to production. If the current pattern continues into 2024, more than 7% of all code changes will be reverted within two weeks, double the rate of 2021. Based on this data, we expect to see an increase in Google DORA's "Change Failure Rate" when the “2024 State of Devops” report is released later in the year, contingent on that research using data from AI-assisted developers in 2023. – Harding & Kloster (Gitclear), Coding on Copilot

This matches my experience. I often use Copilot to generate a first draft, and then have to clean up the generated code before it actually does what it is supposed to do.

Less experienced developers, who rely more heavily on AI coding assistants, will probably push generated code as-is more often, which would feed the trend Harding & Kloster describe.
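For readers who haven't run into it before, the Pearson correlation coefficient that Harding & Kloster cite measures how closely two series move together along a straight line, on a scale from -1 (perfectly opposite) to 1 (perfectly in step). Below is a minimal sketch of the calculation in Python. The function name `pearson` is my own, hypothetical helper, and the inputs in the usage lines are made-up toy numbers, not Gitclear's data.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length numeric sequences.

    Hypothetical helper for illustration only.
    """
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of products of each pair's deviations from their means (unnormalized covariance)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    # Sums of squared deviations (unnormalized variances)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(sxx * syy)

# Toy inputs, not real data
print(pearson([1, 2, 3, 4], [2, 4, 6, 8]))  # perfectly in step: 1.0
print(pearson([1, 2, 3, 4], [8, 6, 4, 2]))  # perfectly opposite: -1.0
```

If you're on Python 3.10 or newer, the standard library's `statistics.correlation` computes the same coefficient, so a hand-rolled version like this is only useful for seeing what goes into the number.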

It seems that at some point the conversational interface of these LLM-powered AI assistants breaks down, and describing what you want to the coding assistant takes more time than writing the code yourself.

Maybe natural language isn't the correct medium to describe machine instructions to machines.

If only there were a better way...

Is the generative AI gold rush a fool's errand?

So from where I stand, the ML engineering profession is probably safe from AI coding assistants, at least in the near term.

There is, however, another issue. A much more serious one.

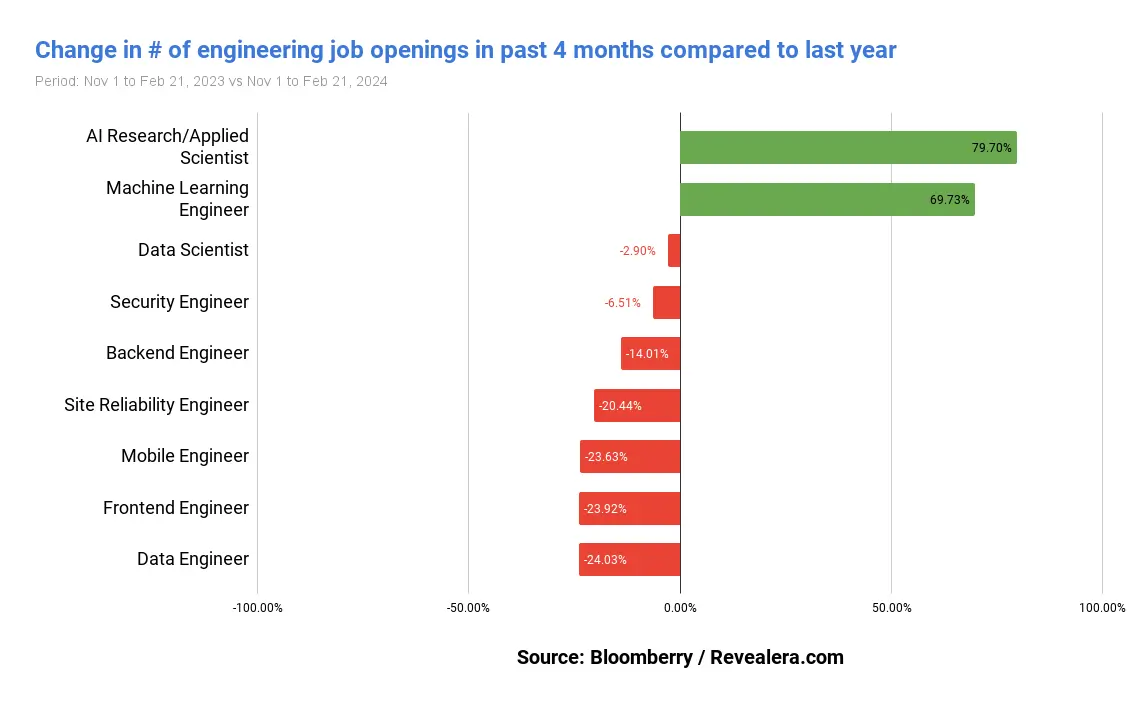

And that is the horde of software engineers from other engineering disciplines flocking to the ML space, which has gone white-hot with the hype around recent advances in generative AI.

This gold rush could spark a global race to the bottom in compensation.

Pay in the field was already extremely uneven, with tech giants readily paying seven-figure salaries to AI staff while some entry-level ML engineering gigs pay barely above their country's minimum wage.

The huge influx of new recruits caused by the genAI hype has made this worse:

So the bad news is if you’re thinking of switching gears and transitioning to becoming a machine learning engineer, there’s lots of competition for relatively few job openings, compared to the rest of software engineering. – Bloomberry, How AI is disrupting the demand for software engineers

In the long term, this will probably be less of an issue because of the huge waves AI is making in the corporate space.

The 2023 WEF Future of Jobs Report showed that re-skilling employees in AI and big data is one of the top priorities for a lot of companies.

And a March 2024 survey from the a16z genAI beat also showed that companies are doubling down on generative AI investments in 2024, with budgets for genAI initiatives coming from a variety of business lines.

But, ironically, it's still the success (or at least the rise in popularity and public perception) of these generative AI applications that has led to a minor and, hopefully, short-term crunch in ML engineering job openings.

So while fully autonomous AI coding applications are still miles away (don't quote me on this, though), the rise of generative AI has caused quite a bit of upheaval in the world of ML engineering. And not always for the right reasons.

Bonus content: will AI take all our jobs?

I recently uploaded a video on YouTube that tries to answer the same question for all the other jobs that make up the global economy.

You can find the video here:

*) There are several ML research streams working on automating another part of machine learning project work: designing and developing ML models. While there have been some impressive advances in AutoML subfields such as NAS (Neural Architecture Search) and meta-learning (e.g., MAML), the approaches developed have historically been either prohibitively expensive in terms of compute or unsuitable for deployment in business settings.